General best practices

How not to use GPT

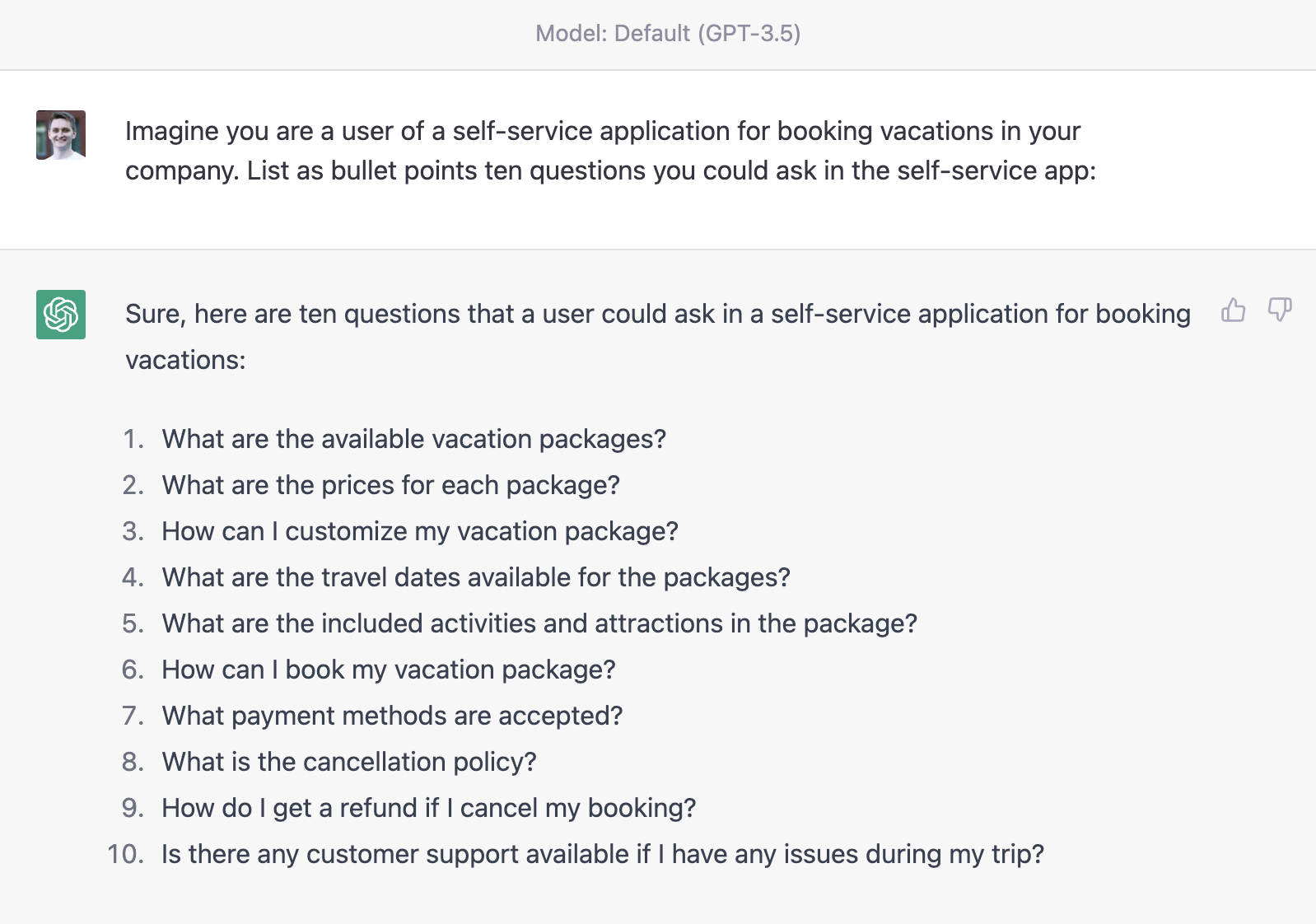

The first big pitfall that you definitely must avoid (especially in Europe) is related to what you communicate with GPT. Consider the following scenario:

ACME GmbH is a German insurer that offers occupational disability insurance. Now one of their customers, Max Mustermann, had a terrible accident at work in which he lost both arms. He is now filing a claim to get the money from his insurer.

This is highly sensitive data, which ACME can’t simply share with OpenAI and thus can’t send the data to ChatGPT.

Shortly after the release of GPT-4, a couple of open-source alternatives emerged, some of which have commercial licenses. We’ll look into this below. As of the date this section is written, these open-source alternatives are weaker than ChatGPT. However, you can significantly improve the performance of your model via fine-tuning, which we’ll cover in a later subsection.

Even in the case of sensitive data, an often overlooked area is to use GPT for data generation. This is extremely helpful when you’re trying to prototype a use case for which you have little data available. In this case, you rather tell GPT how the data should look like, and let it generate samples for you, which you can then use to train other models in e.g. a self-hosted environment. We’ll dive into this in a later section.

Open source alternatives

In case GPT can’t be used for your use case, you can look into its open-source alternatives, which you can host on your premises.

However, caution should be exercised when using open-source alternatives, as some models may not truly be open-source. For instance, some models may only be available for academic or non-commercial use, which means that companies may not be able to use them for their use cases. Therefore, it is important to carefully read the licensing agreements of open-source models before use.

Fortunately, there are open-source licenses available for commercial use, such as the Apache License 2.0. These licenses allow users to use, modify and distribute open-source software for commercial purposes, provided that they adhere to the terms and conditions of the licenses.

While open-source models can provide a solid foundation, they often require fine-tuning to achieve optimal results. We’ll go into deeper detail in the later sections.

For now, great open-source alternatives include:

For prototyping with GPT, we highly recommend testing out GPT4All-J, since it is the most efficient model. You can run it on a Macbook M1 device, i.e. require no expensive GPU capacities.

Taking the use case perspective

One of the best things you can do when sketching a use case is to understand which subtasks there are in your process. Yes, GPT is powerful and can cover a wide variety of tasks, but that doesn’t mean you should let GPT handle everything. Let’s dive into the following example:

A user sends a question to the support team, asking about the status of his current shipment for the product “ACME monitor”.

How can you tackle this use case? Let’s dive into this by thinking of the potential inputs and outputs.

Providing input for GPT

Let’s take a few perspectives (we’ll cover the technicalities in the lower section):

- If you just send this question to ChatGPT right away, it will create a response, but not a useful one. Of course, how could it know the status of something internal?

- So what we’ll do instead is to first extract key information out of the query which we hopefully can use to identify the shipment of the customer in our warehouse system. For instance via email, shipment number or something similar. We feed the information we get from our system into the prompt, such that ChatGPT can provide us with a contextualized answer.

- One step that we can add further is to use something like an FAQ, i.e. data about the products we have. For instance, “ACME monitor” could be well known to have longer shipment times. Via a vector similarity search approach, we can find further contextual facts for our query, and send it to ChatGPT. This further improves the result of our answer.

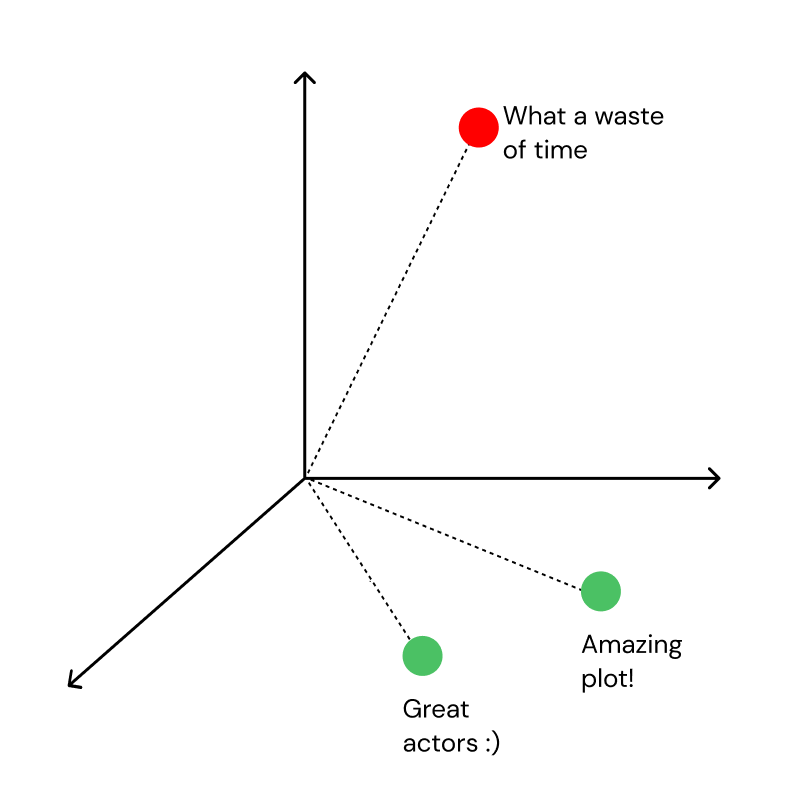

Vector databases and why they are the “memory” of AI

In the preface, we covered the “why now” for modern natural language processing. In general, encoders are capable of generating vector representations of text paragraphs that contain their semantic meaning. This means that you can index a database by its actual meaning, not just its keywords.

If a user now asks a query, a large language model can fetch the best fitting facts for a given query by vectorizing the user question, and then searching for similar content in a vector database. The retrieved facts can then be used in prompt engineering to get better results.

You can think of these vector databases as the “memory” of large language models.

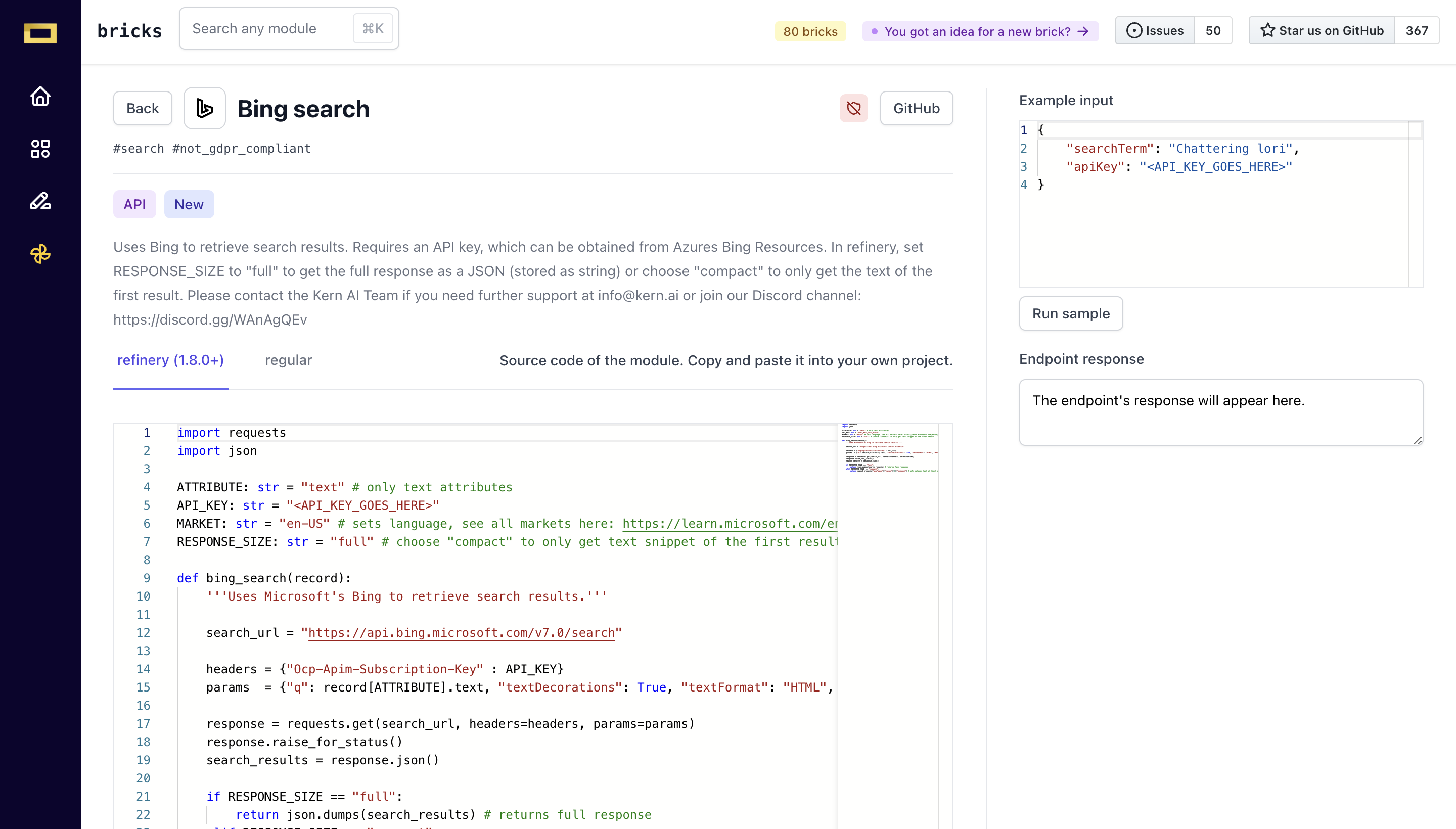

Agents: attach GPT to the web via search engine result pages (SERP)

Further, you can not only build the memory of an AI, you can also allow it to browse the web. If you're working on a personal project and need help researching certain topics, GPT can be a valuable tool. What you need to ensure is that you give GPT access to a search engine, i.e. need to put the output of GPT into a serp engine API, and then use the output to feed back as context into GPT.

Once you have your list of action items, you can save them in a database and ask GPT to research each one using a search engine or other database of your choice. With the information it finds, GPT can compile a collection of resources and summarize them for you, making it easier to tackle your project.

PDF data extraction

Quite often in the context of internal processes, you’ll be working not with machine-readable content, but with PDF scans. Each document type (e.g. invoice, contract, paper) comes with its own layout specialities, so you’ll have to solve this issue before applying natural language processing. At Kern AI, we’re currently developing another developer tool that helps to quickly extract content from text-heavy documents. In general, we can recommend checking out multiple OCR engines, which you’ll require for parsing the text from documents, no matter their type.

PII data and how to detect it

If you want to detect PII in your data, you must do this before sending data to GPT. You can either pick a vendor that suits your requirements (e.g. an ISO 27001-certified provider like Kern AI), or you can build a PII detector in-house.

If you’re building a model in-house, make sure to look into the below sections on weak supervision, which is a framework helping you to combine different algorithms into a single prediction, which is helpful in reliably finding PII. A great resource for this is also bricks, our open-source collection containing off-the-shelf PII identifiers.

As soon as you have a reliable PII detector, you can leverage it to make requests to the ChatGPT API with anonymized prompts. Keep in mind that most likely, no PII detection will be 100% accurate, such that for highly sensitive data, you still need a human-in-the-loop process. An alternative is to host an open-source alternative yourself, which you can finetune.

Working with the output

Now that you have some input ready for GPT, it is also incredibly important to understand how you want to process the output. Let's first dive into the possible types of outcomes: (a) classifications, (b) extractions, (c) generations and (d) ranking.

Classifications

Let's say you want to categorize your incoming emails into topics. If you know which categories there are, list them in your prompt (e.g. “Act as a classifier, and your response is limited to one of the following classes: ['positive', ‘neutral', ‘negative']' if you want to find the sentiment). If you don't know them yet, at least provide some framework to ensure that you get standardized outputs (e.g. “Act as a classifier; respond in lowercase letters; if your class has more than a word, join words by hyphen”). That way, you avoid duplicates like “positive” and “good'.

Extractions

The same is true for extractions; you want to make sure that you provide a schema of the entities you want to extract. Again, let's take emails as an example: if you want to detect the customer reference in an email (if it is given), you should be precise in what you expect the model to create as an output (e.g. “If there is a customer identifier in the email such as their reference number or name, list them in a JSON like this: {"identifier":"your-output"})

Generations

This is the basic output of ChatGPT; it generates a conversation with you. If you want to e.g. have ChatGPT create a memo or reasoning for you, ensure that you are providing some framework for parsing. For instance, tell it to separate “thoughts' via bullet points (e.g. “Is the claim of the client valid? List pro-reasons line-by-line with a leading ‘+', and contra-reasons with a leading ‘-'').

Ranking and Similarity

Another great application of GPT is to use it for comparing two (or more) objects by similarity. Let's consider again the claims processing use case: given a new claim, we can try finding similar claims we processed in the past to support experts in their decision process. Also, this can help to come up with more consistent decisions.

For ranking and similarity, we recommend a different approach than GPT. In the “why now' part of the preface, we covered embeddings. If you've computed the embeddings for your objects, you can easily apply technologies such as vector similarity search. See our section on further resources for more details on this.

Generally, classifications, extractions and ranking/similarity can be done well even in absence of large language models. We cover in the technical best practices section how you can use GPT and other models to act as teachers, such that you can train efficient and smaller models, costing you fewer resources in production.

Validating the outputs

One of the key risks in applying ChatGPT is not worrying about its output. We’ve seen examples in which sales reps included a GPT-generated icebreaker in their outreach messages - e.g. to personalize messages via the first names, the company industry or other things.

In some examples, GPT created insulting icebreakers, e.g. by implying the skin paleness of a person given the first name - definitely not something you want to write to a stranger that you want to win as a client!

There are two ways to overcome this challenge: (a) by setting up a human-in-the-loop process - something that generally is a good idea, or (b) by implementing a postprocessing mechanism that monitors the type of content your large language model comes up with. For instance, if a classifier detects hate speech in the generated content, it shouldn’t be sent.

A great initiative that we’re following with excitement is the development of LMQL, a query language specifically designed for retrieval from large language models.

Adding authentication

If your users want to access confidential data via a chat interface, don’t forget about basic things such as authentication where necessary. For instance, before sending data from internal systems to a user, first, make sure that they can authenticate themselves if necessary. You can also use NLP techniques for this, e.g. to detect dates of birth, names, or other identifiers (again, think of the PII detection use case).

GPT is a part of the system, not the full system itself

By now, you should see that GPT very often is just part of a system, not the full system itself. When building a use case, think of different tasks as the ingredients for your use case. In some cases, GPT is all you need - but that is only in the rarest cases. In many cases, it will also be too expensive to rely on GPT all the time. We will cover different alternatives in the technical best practices section.

Understanding the levels of automation

When implementing AI, it's important to keep in mind that winning over users is just as important as the technology itself. It's not always necessary to jump from no automation to a fully automated process. Gradual improvements can often be more effective. For example, if you currently have a spam detection system in place for your inbox, this is a level 1 automation. The next step could be to classify sentiment and urgency, a level 2 automation. After that, you could try to create automated drafts for incoming responses for very simple categories, a level 3 automation. In level 4, you could integrate the AI with internal IT systems containing relevant context, such as the status of a shipment the customer is inquiring about, and create a draft response with that information. Finally, in level 5, you could fully automate everything, where messages are sent without requiring any human input.

Understanding the longevity of data

With more and more powerful models available every month, we want to emphasize the longevity of data. Again, let’s take a simple comparison.

GPT-3 was released in June 2020. ChatGPT was released in November 2022. GPT-4 was released in March 2023. The Hugging Face hub, an open-source collection of models similar to GPT, contains more than 100,000 models, growing at an immense speed. Algorithms are commoditized in open-source frameworks such as PyTorch.

Data is created in your processes. Your customer services department collects requests and tickets every week. An insurer collects an abundance of claims every year. But in contrast to the commoditized models, data is proprietary and custom to the nature of their underlying processes.

If you want to integrate AI into your process, you have two components to make it strong: choosing a good model and training this model on your training data. For unstructured data - the area of Deep Learning - it is now common sense that for applied AI, you should focus on building a strong set of training data. This is the lever you can use to make sure that a generalistic AI works reliably on your process.