Risks and obstacles

Integrating large language models into an enterprise can bring significant benefits, but there are also risks and obstacles to consider. Here are some of them; we’ll keep updating the list.

Risks

Below are some of the potential risks a company faces when integrating LLMs.

Privacy and security concerns

Large language models require access to a large amount of data, which can include sensitive or confidential information. This creates the risk of data breaches or unauthorized access to sensitive data. You can reduce this risk by deploying an LLM on-premises, e.g. an Apache 2.0 licensed model that is fine-tuned on your own data. We’ve shown different approaches for this in the best practices.

Biases and ethical concerns

Large language models can inadvertently perpetuate biases or reinforce existing societal inequalities. This can lead to ethical concerns around fairness, justice, and discrimination.

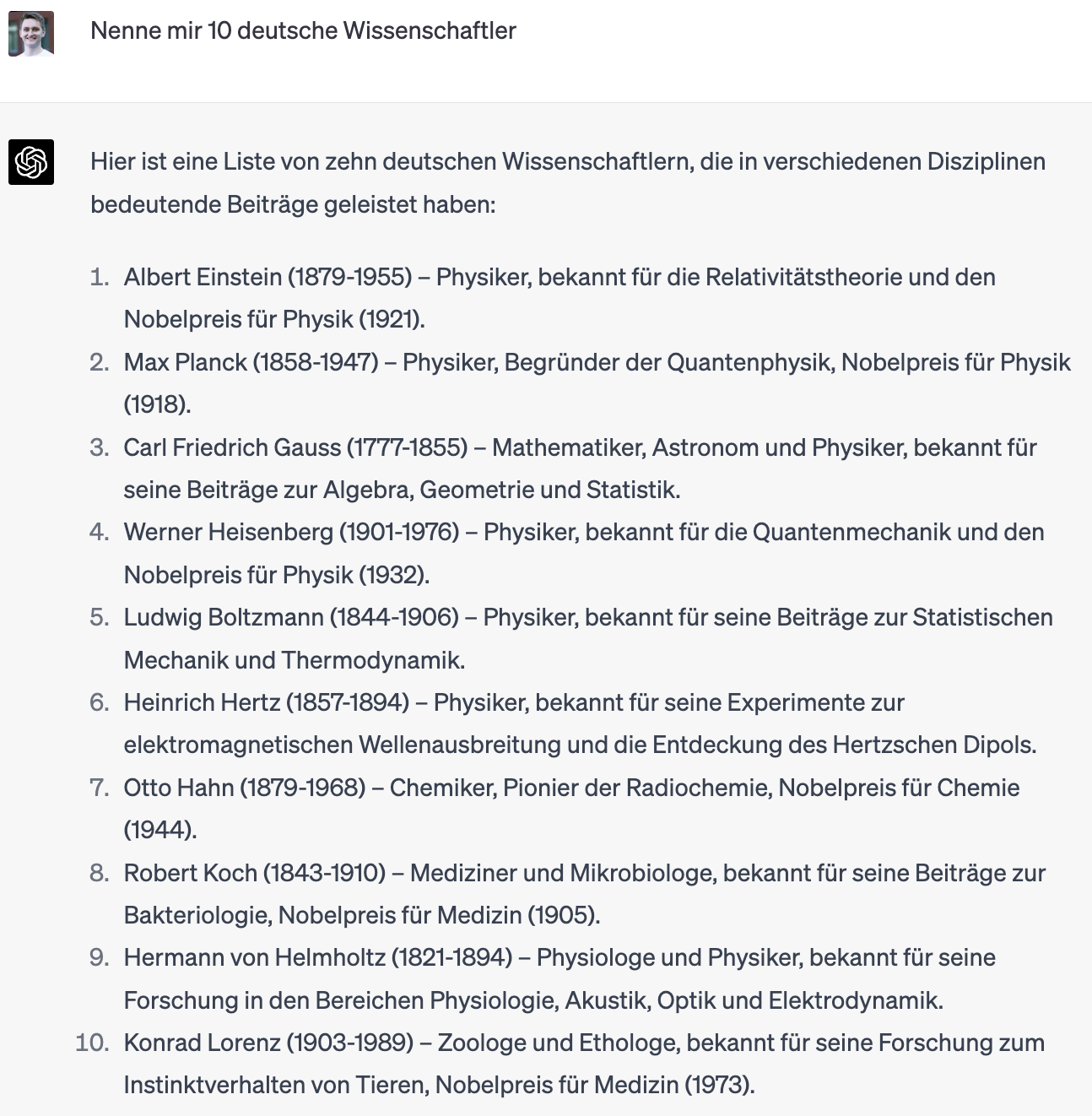

Take this German example for instance; in the first query, I ask about German scientists (”Wissenschaftler”). By default, this is the male version, but many people in Germany are used to always apply the male version of a word. For our query, we only get male scientists:

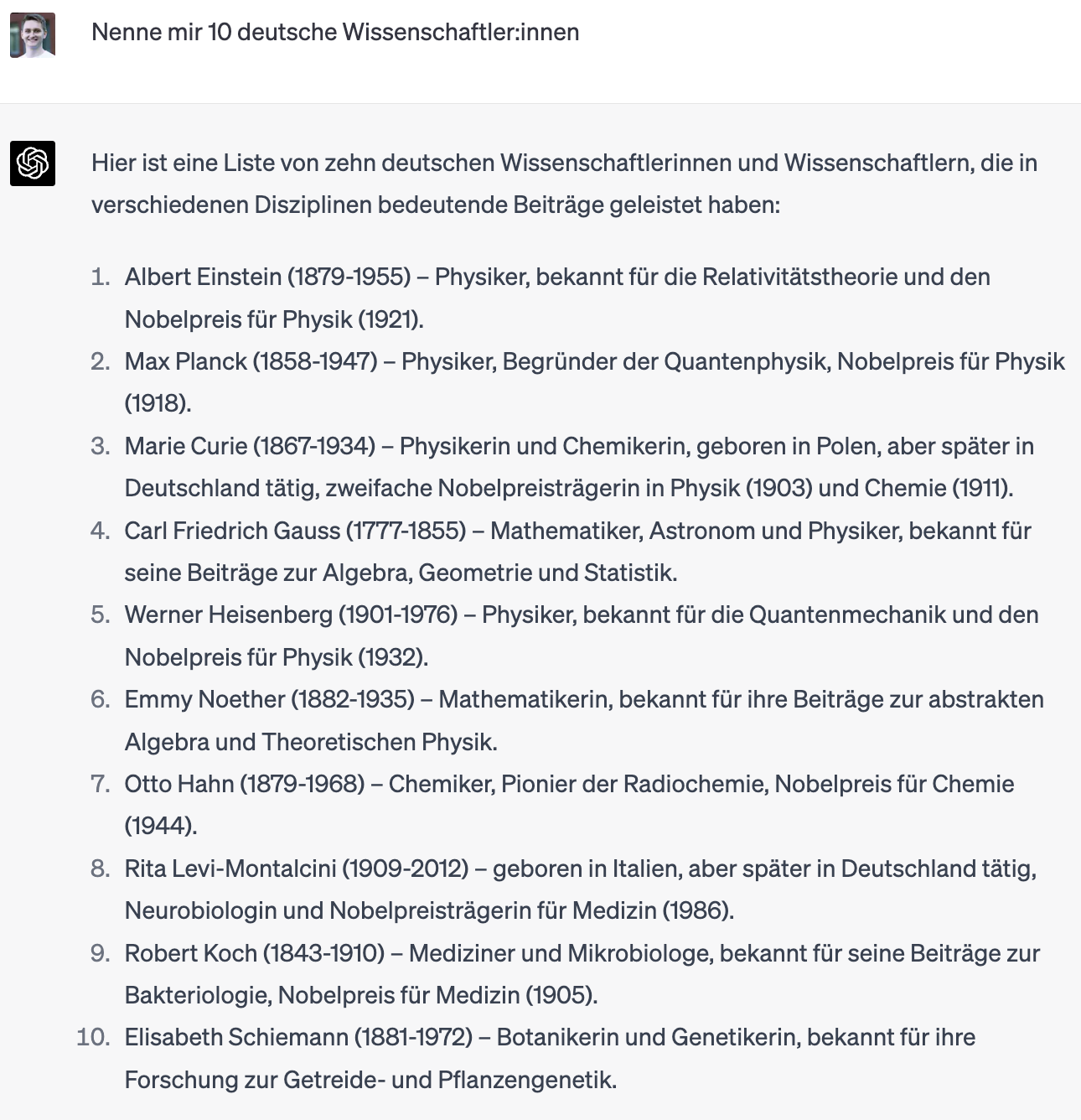

Take Now, let’s rewrite the query. By adding a colon in the word, we add a gender differentiation into the query, showing ChatGPT that we want to have both groups included in our question. The result looks better: German example for instance; in the first query, I ask about German scientists (”Wissenschaftler”). By default, this is the male version, but many people in Germany are used to always apply the male version of a word. For our query, we only get male scientists:

Technical limitations

Large language models are complex and require significant computing resources. Integrating them into an enterprise system can be technically challenging, requiring specialized expertise and infrastructure. We’ve covered several ways to deal with this in the technical best practices.

Considering GPT as the full system, instead of just a part

We’ve mentioned this before, but to emphasize its relevance: you shouldn’t treat GPT as an API that you call for every task in your process. It’s a tool, and a really powerful one, but for many reasons it is worthwhile to think about which tasks you have in your process and which tools fit best to solve each of them. Also, keep in mind that what you feed into GPT, and how you process the outputs, are incredibly relevant.
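To make this more concrete, here is a minimal sketch of GPT as one component in a small pipeline. Everything in it is an assumption for illustration: the message classification task, the label set, the helper names, and the `fake_llm` stand-in, which replaces a real model call so the example runs offline. A simple regex handles the date extraction, the model is only asked to classify, and its answer is validated before it is used.

```python
import re
from typing import Callable, Optional

# Hypothetical task: pull an ISO date out of a message and classify the message.
DATE_PATTERN = re.compile(r"\b\d{4}-\d{2}-\d{2}\b")
ALLOWED_LABELS = {"complaint", "question", "praise"}  # assumed label set


def extract_date(text: str) -> Optional[str]:
    """Deterministic task: a regex is cheaper and more predictable than an LLM call."""
    match = DATE_PATTERN.search(text)
    return match.group(0) if match else None


def build_prompt(text: str) -> str:
    """Pre-processing: control exactly what is fed into the model."""
    cleaned = " ".join(text.split())[:2000]  # normalize whitespace, cap length
    labels = ", ".join(sorted(ALLOWED_LABELS))
    return f"Classify the message as one of: {labels}.\nMessage: {cleaned}\nLabel:"


def parse_label(raw_output: str) -> Optional[str]:
    """Post-processing: accept the model's answer only if it is a known label."""
    words = raw_output.strip().split()
    if not words:
        return None
    label = words[0].strip(".,:;!\"'").lower()
    return label if label in ALLOWED_LABELS else None


def process_message(text: str, call_llm: Callable[[str], str]) -> dict:
    """Combine the tools: regex for the date, the LLM only for classification."""
    label = parse_label(call_llm(build_prompt(text)))
    return {
        "date": extract_date(text),
        "label": label,
        "needs_review": label is None,  # route unusable answers to a human
    }


def fake_llm(prompt: str) -> str:
    """Stand-in for a real model call (an assumption, not a real API)."""
    return " Complaint."


if __name__ == "__main__":
    print(process_message("Order from 2023-04-01 arrived   broken.", fake_llm))
```

In a real integration, you would pass your own client function as `call_llm`. The point of the design is that the model call sits between code you control, so a malformed or unexpected answer is caught instead of flowing into downstream systems.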

Obstacles

Below is a set of challenges you may face during the development and integration of large language models.

Integration with existing systems

Integrating large language models into existing enterprise systems can be challenging. This requires careful planning and coordination to ensure compatibility and avoid disruptions to existing workflows.

Expertise and training

Large language models are a relatively new technology, and many enterprises may not have the necessary expertise to fully utilize them. This requires investment in training and education for employees to ensure they can effectively use and manage these models. Kern AI can help with this.

Cost

Integrating large language models into an enterprise system can be expensive. This includes not only the cost of the models themselves but also the infrastructure and expertise required to support them. We’ve shown ways to reduce these costs in the technical best practices section.

Jailbreaks

Jailbreaking originally refers to the practice of modifying a system's default configuration to gain access to features that are not normally available to users. In the context of GPT, jailbreaking does not mean altering the model's parameters, architecture, or training data (that would be fine-tuning or retraining). Instead, it means crafting prompts that get the model to ignore its instructions or safety guardrails and produce outputs it would normally refuse. While jailbreaking can be a tempting way to get more out of a model, it comes with several risks. One of the most significant is that a jailbroken model can behave unpredictably or produce unintended and harmful results. If you expose an LLM in your own product, your users may also try to jailbreak it, so a system prompt alone should not be treated as a reliable safeguard. Finally, jailbreaking can have legal implications, as it may violate the terms of service of the model provider. As such, it is important to carefully consider these risks before attempting to jailbreak GPT, and to plan for jailbreak attempts against your own integration.